Horizontal Scaling of a Monolith Service

Less change in code, more change in architecture design

Behind the Story

In early 2019 I helped a team by reviewing a service that had some technical challenges. The most important of them were:

APIs were becoming slow under high traffic

API performance was still slow after several rounds of vertical scaling

They asked me to help them find a solution, since they were paying a higher bill with zero performance improvement while traffic kept increasing every day :(

Initial Problems:

Let’s say they had a single server called ServX at the beginning. After getting access to their system, this is what I saw:

Their DB was executing lots of similar queries

DB queries were slow because they joined large tables

They had no caching in place

They were running the API and the database on ServX

Their images were being stored on and served from ServX

Their admin panel was running on ServX

Their background jobs were running on ServX

Their CPU usage was very high

ServX disk I/O was very high

Even when CPU and memory usage were low, API responses were slow for concurrent requests

Initial thoughts:

They were running lots of similar queries. Did they need a caching server, maybe Redis?

Maybe it was not a CPU or memory issue but a hard-drive issue, since all the services were sharing the same ServX disk?

Should we rewrite the system part by part as microservices?

But at that moment they did not have enough time or resources to rewrite the services while also supporting the increasing traffic on the existing system.

So we took a series of scaling actions for the monolith service.

Scaling 101 — implement caching:

Process:

We decided to implement caching to reduce the DB query load. Here is what we did:

Set up a Redis cache on ServX

Added a flat 2–7 minute cache to the major endpoints
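
To make the caching step concrete, here is a minimal sketch of the cache-aside pattern with a fixed expiry. It is not the team's actual code: `InMemoryCache`, `cached_query`, the key name and the 120-second TTL are all made up for illustration. The stand-in cache only mimics the `get` and `setex` calls, so the example runs without a Redis server.

```python
import json
import time


class InMemoryCache:
    """Tiny stand-in exposing only the get/setex subset of a Redis-style client."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired: behave like a cache miss
            return None
        return value

    def setex(self, key, ttl_seconds, value):
        self._data[key] = (self._clock() + ttl_seconds, value)


def cached_query(cache, key, ttl_seconds, run_query):
    """Cache-aside: return the cached result, or run the query and store it."""
    hit = cache.get(key)
    if hit is not None:
        return json.loads(hit)
    result = run_query()
    cache.setex(key, ttl_seconds, json.dumps(result))
    return result


if __name__ == "__main__":
    calls = []

    def expensive_query():  # hypothetical stand-in for a slow JOIN
        calls.append(1)
        return [{"id": 1, "name": "demo"}]

    cache = InMemoryCache()
    for _ in range(3):
        cached_query(cache, "products:list", 120, expensive_query)
    print(len(calls))  # the query ran once; the other two calls hit the cache
```

With a real Redis server, a redis-py client should be usable in place of `InMemoryCache`, since it also offers `get` and `setex`. Repeated identical requests inside the TTL window never reach the database, which is why the number of DB queries drops.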

Output:

DB queries decreased significantly

API response times improved

Concurrent requests still caused the same issues as before

After a while the API slowed down again, so performance had improved but not to a satisfying level

Scaling 102 — separate DB server:

Process:

Create a new DB server dbX

Move the current data from ServX to dbX

Point the DB connection settings in ServX at dbX and shut down the DB server on ServX
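
The "change the DB related info" step is easiest when the connection settings live outside the code. The sketch below assumes environment variables named `DB_HOST` and `DB_PORT` and a placeholder port. These names and values are hypothetical, and the original setup may have used a config file instead.

```python
import os


def database_settings(env=None):
    """Read DB connection settings from the environment (variable names are hypothetical)."""
    env = os.environ if env is None else env
    return {
        # Default mirrors the old setup, where the DB ran on ServX itself.
        "host": env.get("DB_HOST", "127.0.0.1"),
        # Placeholder port; use whatever the real database listens on.
        "port": int(env.get("DB_PORT", "5432")),
    }


if __name__ == "__main__":
    # After the move, only the environment changes; the application code stays the same.
    print(database_settings({"DB_HOST": "dbx.internal"}))  # "dbx.internal" is a made-up hostname
```

Keeping the host in configuration means the switch from ServX to dbX is a deployment change rather than a code change.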

Output:

ServX CPU and memory load decreased

ServX API response times improved

ServX disk I/O decreased a little

Concurrent traffic was still causing slow responses

Scaling 103 — separate Redis server:

Process:

Install a Redis server on dbX

Point the cache connection settings in ServX at the new Redis and shut down the Redis server on ServX

Output:

ServX CPU and memory load decreased

ServX API response times became much better than before

dbX disk I/O increased a bit, but not alarmingly

Concurrent traffic was still causing slow responses

Scaling 104 — separate file server:

Process:

Create a server MiniX and set up MinIO on it

Change the code to use Amazon S3-style file uploads, since MinIO exposes an S3-compatible API

Move the existing files to MiniX and set up proper ACLs

Change the URLs in DB

Remove the files from local storage
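
The "change the URLs in DB" step boils down to mapping each stored local path to its new object-storage URL. Here is a small sketch of that mapping. The `/uploads/` prefix, the `media` bucket and the `minix.example.com` host are assumptions for illustration, not details from the original system.

```python
from urllib.parse import urlsplit


def to_minio_url(old_url,
                 base="https://minix.example.com",  # hypothetical MiniX address
                 bucket="media",                    # hypothetical bucket name
                 local_prefix="/uploads/"):         # hypothetical local path prefix
    """Map a file URL served from ServX to a path-style URL on the file server."""
    path = urlsplit(old_url).path
    if not path.startswith(local_prefix):
        return old_url  # not a locally served file; leave it untouched
    key = path[len(local_prefix):]
    return f"{base}/{bucket}/{key}"


if __name__ == "__main__":
    print(to_minio_url("https://servx.example.com/uploads/avatars/42.png"))
    # https://minix.example.com/media/avatars/42.png
```

A one-off migration script could apply such a function to every row that stores a file URL, ideally after the files themselves have been copied over and before the local copies are removed.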

Output:

ServX CPU and memory load decreased

ServX API response times improved

ServX disk I/O decreased significantly

Concurrent traffic was still causing slow responses, but much less often

Scaling 105 — separate APIs from utils tasks:

Process:

Create a server AdminX

Move the admin panel from ServX to AdminX

Move background tasks from ServX to AdminX

Shut down the admin panel and background tasks on ServX

Output:

ServX CPU and memory load decreased

ServX API response times improved

Issues with concurrent traffic were reduced to almost normal

Scaling 106 — add load-balancer:

Now it was time to add a load balancer, since all the dependencies had been separated onto their own servers.

Process:

Create a load-balancing server LoadX using Nginx

Take a backup of ServX

Create 6 servers from the ServX backup

Add the IPs of those 6 servers as upstream servers in the LoadX configuration

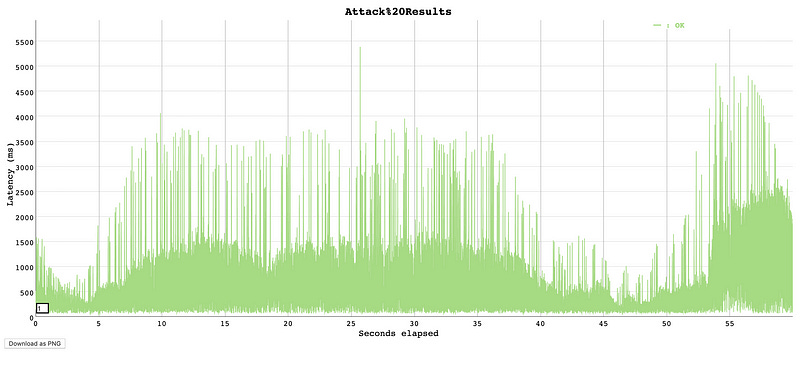
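
To show roughly what the LoadX configuration looks like, the sketch below generates an Nginx `upstream` block from a list of server IPs. The upstream name `api_backend`, the IP addresses and port 8000 are made up, and the real configuration likely had more in it (timeouts, headers, TLS).

```python
def render_upstream(name, servers, port=8000):
    """Render a minimal Nginx config that proxies requests to the given servers."""
    lines = [f"upstream {name} {{"]
    lines += [f"    server {ip}:{port};" for ip in servers]
    lines.append("}")
    lines += [
        "",
        "server {",
        "    listen 80;",
        "    location / {",
        f"        proxy_pass http://{name};",
        "    }",
        "}",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Six hypothetical private IPs standing in for the ServX clones.
    ips = [f"10.0.0.{n}" for n in range(11, 17)]
    print(render_upstream("api_backend", ips))
```

With no balancing method specified, Nginx distributes requests across the upstream servers in round-robin order, so each clone receives roughly one sixth of the traffic.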

Output:

API response times improved drastically

The slowdown under concurrent traffic disappeared like magic

Final Result:

API response time improved from 130 sec to 100–350 ms

Very few changes to the codebase compared to the performance gain

Server cost reduced to 70%

You may wonder how the cost dropped to 70% when we added so many servers. We used low-spec servers, since the plan was to spread the load of one high-powered server across multiple low-powered ones.

Basically, we moved from vertical scaling to horizontal scaling! This lets us handle much more traffic with less costly resources.
